
Argo Workflows: How to actually run a task in a Workflow

5 min read

When creating a Workflow in Argo Workflows, we need to define the tasks it will run. These tasks are defined using templates: they are the building blocks of a Workflow, defining how each task is executed.

31/10/2024
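As a minimal sketch of how a template describes a task, the Python below builds a Workflow manifest as a plain dict. The template name say-hello, the busybox image and the echo command are illustrative assumptions. Argo manifests are usually written in YAML, and because YAML is a superset of JSON, the printed output should also be accepted by argo submit.

```python
import json


def hello_workflow(message: str) -> dict:
    """Build a minimal Argo Workflow manifest with a single container template."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "hello-"},
        "spec": {
            # The entrypoint must match the name of one of the templates below
            "entrypoint": "say-hello",
            "templates": [
                {
                    "name": "say-hello",
                    # A container template: this is how the task is actually run
                    "container": {
                        "image": "busybox",
                        "command": ["echo"],
                        "args": [message],
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(hello_workflow("hello world"), indent=2))
```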


Golang: Using the Garbage Collection tracing

3 min read

Go is a garbage-collected language: it automatically reclaims unused memory. This automation can sometimes introduce performance challenges. We can use the GODEBUG environment variable to gain insight into specific internal operations of the Go runtime, such as scheduling, memory allocation, and garbage collection.

30/10/2024
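For garbage collection specifically, running a program with GODEBUG=gctrace=1 (for example GODEBUG=gctrace=1 ./myapp, where myapp is a placeholder) makes the runtime print one summary line per collection to stderr. The sketch below parses the leading fields that the Go runtime documentation describes: GC number, seconds since program start, percentage of time spent in GC, heap size at GC start and end, live heap, and heap goal. The line layout has changed between Go versions, so the regular expression is an assumption rather than a stable format.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumed layout of the leading fields of a gctrace line; trailing fields
# (stacks, globals, number of Ps) are ignored by this pattern.
GCTRACE_RE = re.compile(
    r"^gc (\d+) @([\d.]+)s (\d+)%: .*? (\d+)->(\d+)->(\d+) MB, (\d+) MB goal"
)


@dataclass
class GCEvent:
    number: int         # GC cycle number, incremented at each GC
    elapsed_s: float    # seconds since program start
    gc_percent: int     # percentage of time spent in GC since program start
    heap_start_mb: int  # heap size at GC start
    heap_end_mb: int    # heap size at GC end
    live_heap_mb: int   # live heap
    goal_mb: int        # goal heap size


def parse_gctrace(line: str) -> Optional[GCEvent]:
    """Parse one gctrace line, returning None if it does not match the assumed layout."""
    m = GCTRACE_RE.match(line.strip())
    if m is None:
        return None
    n, t, pct, start, end, live, goal = m.groups()
    return GCEvent(int(n), float(t), int(pct), int(start), int(end), int(live), int(goal))
```

Feeding stderr through it line by line makes it easier to spot, for example, a live heap that keeps growing from one cycle to the next.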


Argo Workflows: Passing artifacts in a multi-step WorkflowTemplate

3 min read

In a Workflow, we'll need to share data between the different steps. To do so, we can use the artifacts section in the WorkflowTemplate, which allows us to pass data between steps in a Workflow.

29/10/2024
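A minimal sketch of the idea, written as a Python dict that mirrors the YAML manifest: the first step declares an output artifact at a file path, and the second step receives it through arguments.artifacts using a {{steps.<step>.outputs.artifacts.<name>}} reference. The step, template and artifact names and the busybox image are hypothetical, and the sketch assumes an artifact repository (such as S3 or MinIO) is already configured for the cluster.

```python
import json


def artifact_passing_template(name: str = "artifact-passing") -> dict:
    """Build a WorkflowTemplate where one step writes a file and the next reads it."""
    write_step = {
        "name": "write-file",
        "container": {
            "image": "busybox",
            "command": ["sh", "-c"],
            "args": ["echo hello > /tmp/message.txt"],
        },
        # After the container exits, Argo uploads this file to the artifact repository
        "outputs": {"artifacts": [{"name": "message", "path": "/tmp/message.txt"}]},
    }
    read_step = {
        "name": "read-file",
        # Before the container starts, Argo downloads the artifact to this path
        "inputs": {"artifacts": [{"name": "message", "path": "/tmp/message.txt"}]},
        "container": {"image": "busybox", "command": ["cat", "/tmp/message.txt"]},
    }
    main = {
        "name": "main",
        # Each inner list is one step group; the groups run sequentially
        "steps": [
            [{"name": "generate", "template": "write-file"}],
            [{
                "name": "consume",
                "template": "read-file",
                "arguments": {"artifacts": [{
                    "name": "message",
                    "from": "{{steps.generate.outputs.artifacts.message}}",
                }]},
            }],
        ],
    }
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "WorkflowTemplate",
        "metadata": {"name": name},
        "spec": {"entrypoint": "main", "templates": [main, write_step, read_step]},
    }


if __name__ == "__main__":
    print(json.dumps(artifact_passing_template(), indent=2))
```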


Argo Workflows: Input Parameters in a WorkflowTemplate

2 min read

Having a WorkflowTemplate with input parameters in Argo Workflows allows you to create reusable templates that can be customized at runtime. This is useful when you want to pass dynamic values to the workflow template when you submit it.

Following the example of the WorkflowTemplate that builds and pushes a Docker image using Kaniko, we are going to update it to use input parameters.

28/10/2024
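A minimal sketch of a parameterized Kaniko WorkflowTemplate, expressed as a Python dict mirroring the YAML: default values live under spec.arguments.parameters, the entrypoint template declares matching inputs.parameters, and the container arguments reference them as {{inputs.parameters.<name>}}. The template name, repository and registry URLs are hypothetical placeholders, and registry credentials are left out for brevity.

```python
import json


def kaniko_workflow_template(name: str = "kaniko-build") -> dict:
    """Build a WorkflowTemplate whose build context and destination are input parameters."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "WorkflowTemplate",
        "metadata": {"name": name},
        "spec": {
            "entrypoint": "build",
            # Default values, which can be overridden when the template is submitted
            "arguments": {"parameters": [
                {"name": "context", "value": "git://github.com/example/app.git"},
                {"name": "destination", "value": "registry.example.com/example/app:latest"},
            ]},
            "templates": [{
                "name": "build",
                # The entrypoint template declares the parameters it consumes
                "inputs": {"parameters": [{"name": "context"}, {"name": "destination"}]},
                "container": {
                    "image": "gcr.io/kaniko-project/executor:latest",
                    "args": [
                        "--dockerfile=Dockerfile",
                        "--context={{inputs.parameters.context}}",
                        "--destination={{inputs.parameters.destination}}",
                    ],
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(kaniko_workflow_template(), indent=2))
```

Once the template exists in the cluster, a command along the lines of argo submit --from workflowtemplate/kaniko-build -p destination=registry.example.com/example/app:v2 should override one parameter while keeping the other default.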


Create a reusable Argo WorkflowTemplate

2 min read

Given a specific Argo Workflow, you can convert it into a reusable Argo WorkflowTemplate. This allows you to reference the template across multiple workflows without repeating its YAML definition.

25/10/2024
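A rough sketch of that conversion, treating manifests as Python dicts: the kind changes to WorkflowTemplate and the generated name becomes a fixed one, after which a Workflow only needs a spec.workflowTemplateRef pointing at it. The helper names and hello-template are hypothetical, and the referenced WorkflowTemplate is assumed to live in the same namespace as the Workflow.

```python
import copy
import json


def to_workflow_template(workflow: dict, name: str) -> dict:
    """Turn a Workflow manifest into a WorkflowTemplate with a stable name."""
    template = copy.deepcopy(workflow)  # leave the original manifest untouched
    template["kind"] = "WorkflowTemplate"
    metadata = template.setdefault("metadata", {})
    # A template needs a fixed name so that other Workflows can reference it
    metadata.pop("generateName", None)
    metadata["name"] = name
    return template


def workflow_from_template(template_name: str) -> dict:
    """Build a Workflow that reuses a WorkflowTemplate instead of repeating its spec."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": f"{template_name}-"},
        "spec": {"workflowTemplateRef": {"name": template_name}},
    }


if __name__ == "__main__":
    original = {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "hello-"},
        "spec": {
            "entrypoint": "say-hello",
            "templates": [{"name": "say-hello",
                           "container": {"image": "busybox", "command": ["echo", "hello"]}}],
        },
    }
    print(json.dumps(to_workflow_template(original, "hello-template"), indent=2))
    print(json.dumps(workflow_from_template("hello-template"), indent=2))
```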


Build and Push Container Images in Kubernetes with Argo Workflows

2 min read

Building container images directly in Kubernetes offers a streamlined and efficient way to manage your containerized applications. Tools like Kaniko allow you to build container images inside Kubernetes Pods. In this post, instead of using other frameworks like Tekton or Shipwright, we'll define our custom pipeline directly with Argo Workflows.

24/10/2024
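A minimal sketch of such a pipeline, expressed as a Python dict mirroring the YAML manifest: a single container template runs the Kaniko executor image with --dockerfile, --context and --destination, and mounts registry credentials at /kaniko/.docker so the image can be pushed. The Secret name regcred, the Git repository and the registry are hypothetical, and the Secret is assumed to be of type kubernetes.io/dockerconfigjson.

```python
import json


def kaniko_build_workflow(context: str, destination: str) -> dict:
    """Build an Argo Workflow that runs the Kaniko executor in a single Pod."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "kaniko-build-"},
        "spec": {
            "entrypoint": "build",
            # Registry credentials from a hypothetical dockerconfigjson Secret
            "volumes": [{
                "name": "docker-config",
                "secret": {
                    "secretName": "regcred",
                    "items": [{"key": ".dockerconfigjson", "path": "config.json"}],
                },
            }],
            "templates": [{
                "name": "build",
                "container": {
                    "image": "gcr.io/kaniko-project/executor:latest",
                    "args": [
                        "--dockerfile=Dockerfile",
                        f"--context={context}",
                        f"--destination={destination}",
                    ],
                    # Kaniko reads push credentials from /kaniko/.docker/config.json
                    "volumeMounts": [{"name": "docker-config", "mountPath": "/kaniko/.docker"}],
                },
            }],
        },
    }


if __name__ == "__main__":
    wf = kaniko_build_workflow(
        "git://github.com/example/app.git",         # hypothetical repository
        "registry.example.com/example/app:latest",  # hypothetical image reference
    )
    print(json.dumps(wf, indent=2))
```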


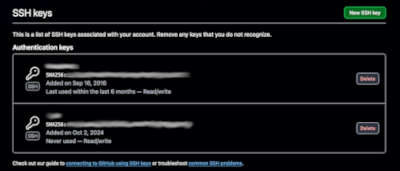

Github: Getting the SHA256 fingerprint of an SSH key

1 min read

When you check the SSH keys on your GitHub account, GitHub shows the SHA256 fingerprint of each key.

Depending on the version of OpenSSH that you are using, the fingerprint you get from ssh-keygen -lf might be different from the one GitHub shows you.

23/10/2024
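To see where the difference comes from, the sketch below computes both formats from the base64 key blob in a .pub file. The SHA256 format (the default since OpenSSH 6.8, and what GitHub displays) is the unpadded base64 of the SHA-256 digest, while older OpenSSH versions printed colon-separated hex of the MD5 digest. With a recent OpenSSH you can also choose the format explicitly, for example ssh-keygen -E md5 -lf ~/.ssh/id_ed25519.pub. The function name is illustrative.

```python
import base64
import hashlib


def ssh_fingerprints(public_key_line: str) -> tuple[str, str]:
    """Return (SHA256, MD5) fingerprints for an OpenSSH public key line."""
    # A public key line looks like: "<key-type> <base64-blob> [comment]"
    blob = base64.b64decode(public_key_line.split()[1])
    # Modern format: base64 of the SHA-256 digest with the trailing '=' padding removed
    sha256 = base64.b64encode(hashlib.sha256(blob).digest()).decode().rstrip("=")
    # Legacy format: MD5 digest as colon-separated hex byte pairs
    md5_hex = hashlib.md5(blob).hexdigest()
    md5 = ":".join(md5_hex[i:i + 2] for i in range(0, len(md5_hex), 2))
    return f"SHA256:{sha256}", f"MD5:{md5}"
```

Reading the first line of a .pub file and passing it in should give the same SHA256 string that GitHub lists for that key.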


Getting started with Argo Workflows

6 min read

Argo Workflows is an open-source, container-native workflow engine designed to run containerized jobs in Kubernetes clusters, similar to Tekton.

It supports both DAG-based (Directed Acyclic Graph) and step-based workflows, in which each task runs in its own container.

22/10/2024
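To illustrate the DAG part, the sketch below uses Python's graphlib to group tasks into successive waves, where each task only depends on tasks from earlier waves. The task names A to D mirror the dependencies field of an Argo DAG template, but this only models the ordering: Argo's controller starts each task as soon as its own dependencies have succeeded, rather than waiting for a whole wave to finish.

```python
from graphlib import TopologicalSorter


def execution_waves(dependencies: dict[str, list[str]]) -> list[list[str]]:
    """Group DAG tasks into waves; each task depends only on tasks in earlier waves."""
    sorter = TopologicalSorter(dependencies)  # maps task -> tasks it depends on
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as finished
    return waves


if __name__ == "__main__":
    # Diamond-shaped DAG: B and C depend on A, D depends on both B and C
    diamond = {"A": [], "B": ["A"], "C": ["A"], "D": ["B", "C"]}
    print(execution_waves(diamond))  # [['A'], ['B', 'C'], ['D']]
```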


AWS: An error occurred (Throttling) when calling

2 min read

When working with AWS, one might encounter errors related to throttling, such as:

Error: An error occurred (Throttling) when calling the GenerateServiceLastAccessedDetails operation (reached max retries: 4): Rate exceeded

This error occurs when an AWS service has throttled your activity: the number of API requests made for that particular account and region has exceeded the rate limit allowed for that particular service.

10/10/2024
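A common way to cope with throttling is to retry with exponential backoff and jitter. The sketch below wraps any callable; ThrottlingError is a hypothetical stand-in for whatever exception your SDK raises on a Throttling error code, and the delays are illustrative defaults, not AWS-recommended values. The AWS CLI and SDKs also expose their own retry settings, such as the AWS_RETRY_MODE and AWS_MAX_ATTEMPTS environment variables, which may be enough on their own.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ThrottlingError(Exception):
    """Hypothetical stand-in for an SDK error whose code is 'Throttling'."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on ThrottlingError with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except ThrottlingError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last throttling error
            # The upper bound doubles on each retry (0.5s, 1s, 2s, ...) up to max_delay;
            # sleeping a random fraction of it spreads out concurrent clients
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise RuntimeError("unreachable")
```

Passing sleep as a parameter keeps the helper easy to test without actually waiting.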


Understanding AWS IAM Permissions Boundaries

3 min read

AWS Identity and Access Management (IAM) is a critical service for managing access to AWS resources. Among its many features, Permissions Boundaries add an extra layer of control to ensure that users and roles cannot exceed specific permissions, even if they somehow manage to update their IAM policies.

08/10/2024
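A deliberately simplified model of how a boundary limits permissions: an action is allowed only if both the identity-based policy and the permissions boundary allow it. The sketch below ignores explicit Deny statements, conditions, resources, resource-based policies and SCPs, and it uses fnmatch to approximate IAM's * and ? wildcards. The function names and example policies are hypothetical.

```python
from fnmatch import fnmatchcase


def allowed_by(patterns: list[str], action: str) -> bool:
    """True if any Allow pattern (IAM-style wildcards such as 's3:*') matches the action."""
    # IAM action names are case-insensitive, so compare in lower case
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)


def is_effectively_allowed(identity_allows: list[str], boundary_allows: list[str],
                           action: str) -> bool:
    """Simplified model: the action needs an Allow in BOTH the identity policy and the boundary."""
    return allowed_by(identity_allows, action) and allowed_by(boundary_allows, action)
```

With an identity policy allowing ["s3:*", "iam:*"] and a boundary allowing ["s3:*", "ec2:Describe*"], s3:GetObject is allowed but iam:PutUserPolicy is not, which is why granting oneself iam:* does not escape the boundary.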
